Currently doing the 30 Days of AI in Testing offered by the excellent Ministry of Testing.

https://www.ministryoftesting.com/events/30-days-of-ai-in-testing

This blog is a record of Days 16 to 20, rather than having them just on the Club. It’s quite a lot of writing and I like to have my writing in one place where possible.

Day 16

Research AI and Accessibility Testing

After searching for articles, this one caught my attention:

https://bootcamp.uxdesign.cc/accessibility-testing-ui-with-ai-5a313d5f9e4a

The hypothesis is that AI can simulate the use of screen readers and other assistive technologies, while providing consistency and comprehensive coverage. This seems a big claim to me, but I guess the world of AI is full of them.

It mentions three tools, and three is always a nice number for research, rather than just a couple:

- Axe by Deque Systems – this appears to be a scanner of some kind, although the AI part is hard to decipher – https://www.deque.com/axe/ai/. I think the AI tailors the tests to the type of site: if you are image heavy then the tests will focus there.

- Tenon.io – Tenon doesn’t exist, but Level Access does. I could see no mention of AI.

- AccessiBe – This is a little more open about its application of AI. https://accessibe.com/accesswidget/artificial-intelligence. They have a model trained on ‘past encounters’ with what works and what doesn’t. They also use OCR and image recognition technologies for images, for example to add alt text automatically.

Evaluate Applicability in Your Context

I currently work on a mobile app where the extent of accessibility thinking is on text display sizes. Often the app has not been able to cope with larger sizes. This is important for us, as many users are older with poor eyesight and are often using the app on building sites, for example.

Of the three above, Axe and AccessiBe would be the ones to investigate further. To be honest, a basic scanner would be a good start for us, never mind an AI powered one. That isn’t to say a basic scanner is enough, but one must start somewhere.

The tone of AI using your site like someone who is visually impaired (for example) leaves a poor taste. Automation, even enhanced by AI, cannot give you that experience.

Day 17

I decided to go with Bugasura which has AI powered generation of bug reports.

Experiment with AI for Bug Reporting

I typed website into the issue summary and Bugasura generated:

Detailed Description:-The website is not loading and displaying an error message.

Impact:-This issue prevents users from accessing the website, resulting in a loss of potential customers and revenue. It also gives a negative impression of the business's reliability and professionalism. There is a risk of users seeking alternative websites for their needs.

Expected Behaviour:-The website should load successfully without any error messages. It should display the intended content and functionality to the users. System requirements include a stable internet connection and a compatible web browser.

Let’s try something more detailed:

When submitting call for papers form a 403 error code is returned

Bugasura returns this:

Detailed Description:-When submitting the call for papers form, a 403 error code is returned. This error indicates that the user does not have the necessary permissions to access or perform the requested action. The issue is likely caused by a configuration error or an access control problem within the system.

Impact:-The impact of this issue is that users are unable to submit their call for papers, preventing them from participating in the event or conference. This can lead to frustration and a negative user experience. Additionally, if the issue persists, it could result in a loss of submissions and potential speakers for the event.

Expected Behaviour:-When users attempt to submit the call for papers form, they should be able to successfully submit it without any error messages. The system should grant the necessary permissions to the user and process the submission accordingly. It is important to ensure that the necessary access controls are in place to prevent unauthorized users from submitting papers.

In both cases, the tool reported it as a ‘high’ priority, generated some tags and some device details based on my browser and OS.

I then changed the summary to:

The submit button is dark blue should be light blue

Bugasura said:

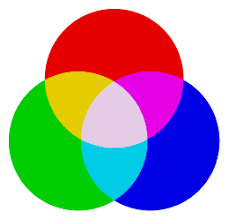

Detailed Description:-The submit button on the login page is currently displayed in a dark blue color. This color should be changed to a light blue to match the overall color scheme of the application.

Impact:-The current dark blue color of the submit button may not align with the desired branding and visual identity of the application. This could result in a negative user experience and potential confusion for users who are expecting a lighter blue color. There is a risk of inconsistency between different elements in the application's design.

Expected Behaviour:-When users attempt to login, the submit button should be displayed in a light blue color that is consistent with the overall color scheme of the application. This will provide a visually cohesive experience for users and align with the desired branding.

Changed the tags to colour scheme related and medium priority.

When submitting *(&%^%

Detailed Description:-The given issue `*(&%^%` is a random combination of special characters and does not provide any specific information or context. It is unclear what the issue is or how it is related to the system or application in question.

Impact:-N/A

Expected Behaviour:-N/A

So there is some attempted handling of nonsense.

There are also some excellent hallucinations about limericks, politicians and being able to login.

Write me a limerick about Jeremy Corbyn

Detailed Description:-Jeremy Corbyn is a British politician who served as the leader of the Labour Party and Leader of the Opposition from 2015 to 2020. He is known for his left-wing policies and his role in advocating for social justice and inequality. However, he has also faced criticism for his handling of issues such as Brexit and anti-Semitism within the Labour Party. Despite his controversial reputation, he remains a prominent figure in UK politics.

Impact:-The impact of Jeremy Corbyn's political career and ideology is far-reaching. His policies and leadership style have had significant implications for the Labour Party and UK politics as a whole. While he has garnered support from some for his progressive ideas and commitment to social justice, he has also faced criticism for his handling of certain issues, which has led to divisions within the party and a loss of public trust. The controversy surrounding Corbyn and the Labour Party's handling of anti-Semitism allegations has also damaged the party's reputation and raised concerns about its commitment to inclusivity.

Expected Behaviour:-When users attempt to login, the system should verify their credentials and grant access to their account if the login information is correct. If the credentials are incorrect, the system should display an error message and prompt the user to try again.

Evaluate the Reporting Quality

This was a really curious exercise:

- The AI inferred a lot. From one word ‘Website’ it created a narrative that the site was down.

- With a better prompt the second time around, the narrative was better, as one would expect.

- With the third summary I left out one key piece of information, where the button was. The AI guessed it was the login button, which is reasonable but incorrect.

On each occasion, it would have been good to get a little bit of coaching from Bugasura about what it needs, rather than it just inferring so much.

I did like the impact section though, which is often missing from bug reports.

The priority seemed reasonable too, with not being able to submit a form rated above the colour of a button, although it was a fairly obvious comparison.

I was left wondering whether it would improve given more bug information, learning more with more input. I hope so.

I am also deeply against bug trackers that are separate from where the rest of the team’s work is, although perhaps the integrations would help here.

Day 18

Share your greatest frustration with AI in Testing

I’ll use Rosie’s prompts to guide me, like a human Generative AI:

Limited Functionality

I think the more advanced areas are in test idea generation and design, plus automation. Accessibility, usability and many other ilities are still mostly scanners, with AI ingesting the data and interpreting it. Also, Generative AI presents ideas, solutions and tool choices with total confidence, even though the depth of functionality in many of the areas is still pretty low.

The Black Box Conundrum

Totally. I used Bugasura yesterday and it generated an elaborate bug from just the word ‘Website.’ Claude generated test scenarios from minimal information, with a hierarchy of products, pages and options for users. I still find myself thinking ‘how did you get there?’ I guess the worrying thing is that others might not think like that and will accept what’s given.

The Learning Curve Struggle

It does a little, especially when the case for incorporating it into daily work is still fairly abstract. I think I want to be able to deploy and configure a model locally, and train it on some choice information that’s relevant to my context. Perhaps once that happens, the possibilities will become more obvious to me and the overwhelm will be less.

Bias in the Machine

Large corporations and for profit organisations owning and training Generative AI pretty much bakes bias into the system. I’m not so much worried as making sure it will be part of any future work on AI that I might do.

Data Privacy Worries

Of course. As it is so opaque where the data comes from, there are plenty of occasions where data that shouldn’t have been slurped has been slurped. Again, large corporations can use their size to ingest what they want, which then becomes hard to argue against. That said, many people have already bundled up their private lives and handed them over to Meta/X/ByteDance, so is it that much different? Basically, we may worry about such things but a lot of people don’t.

The Job Security Conundrum

I don’t worry about AI taking testing roles. I do worry about people in charge of budgets and hiring thinking that it can and does. However, we can be pioneers in its use, rather than having AI done to us, we can adapt it to our needs.

Day 19

Find and read a short article that discusses the use of AI in test prioritisation and selection.

This was a curious search, as it seems academia is interested in this problem, mostly trying to answer the question:

If we have a test specification, which tests should be automated?

This is a much less interesting question than ‘what shall we test’ or even ‘at what level should we test.’ Also, it’s less of a challenge in my opinion. It depends on having a test specification, which is not really how most teams work; they may have acceptance criteria or define behaviours in gherkin syntax or something. Maybe that’s enough. There are some interesting ideas around codebase analysis and selecting tests based on what is connected to what has changed.
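The idea of selecting tests based on what is connected to what has changed can be sketched in a few lines. This is only an illustration under my own assumptions, not something taken from the articles I read: the module names, the dependency map and the `select_tests` function are all hypothetical, and a real tool would derive the map from the codebase rather than have it typed in by hand.

```python
from collections import deque

# Hypothetical map: each module -> the modules it depends on.
DEPENDS_ON = {
    "checkout": {"pricing", "auth"},
    "pricing": {"catalog"},
    "profile": {"auth"},
}

# Hypothetical map: each module -> the tests that cover it.
TESTS_FOR = {
    "catalog": ["test_catalog"],
    "pricing": ["test_pricing"],
    "checkout": ["test_checkout"],
    "profile": ["test_profile"],
    "auth": ["test_auth"],
}


def select_tests(changed, depends_on, tests_for):
    """Return the tests for every module reachable from the changed ones."""
    # Invert the map so we can walk from a module to whatever depends on it.
    dependents = {}
    for module, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)

    # Breadth-first walk outwards from the changed modules.
    affected = set(changed)
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, ()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)

    selected = set()
    for module in affected:
        selected.update(tests_for.get(module, ()))
    return sorted(selected)


print(select_tests(["catalog"], DEPENDS_ON, TESTS_FOR))
```

A change to `catalog` pulls in the tests for `pricing` and `checkout` because they sit downstream of it, while `profile` and `auth` are left alone. Whether that is a safe saving depends entirely on how complete the dependency map is.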

Consider how you or your team currently perform this task.

At the moment:

- Elaboration of ticket with initial acceptance criteria – add test ideas and areas to check for regression.

- Story Kick Off – 3 get together and elaborate further, more tests, where to tackle them (unit, widget, integration, exploratory).

- Handover – developer and tester get together, go over low level tests and add any more test ideas.

- Testing – further scenarios are generated during exploration.

- Results – captured in a comment on the ticket. They disappear somewhat.

In your context, would delegating this task to an AI be valuable? If so, how would your team benefit?

I feel like with the right training data (code, model of the system, test results, bugs, tickets) you could have a model come up with the first pass for review by the team, as long as the collaboration continued as per my list above and we could build the test selection iteratively. I think areas for regression would be powerful for us, as in our app there are lots of things that need testing manually (location tracking in particular).

What are the risks of delegating test prioritisation and selection to an AI model?

- Diminishing the collaborative aspect of test selection and prioritisation. Between humans I mean.

- I wonder how well the history of an application would be known as well. I have tested this app for 18 months and I know the areas that go wrong (or at least I feel I do).

How could you fairly evaluate the performance of an AI tool in this task?

I think I would select 2 of the big four:

- Lead time for changes – from first commit to deploy you would want to see an improvement.

- Change failure rate – less remediation in production.

Always try and use the big four, rather than making them testing specific metrics or even related to the AI itself.
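To make those two metrics concrete, here is a minimal sketch of how they could be calculated from a list of deployments. The `Deployment` record and its field names are my own assumptions for illustration; in practice the data would come from your source control and deployment tooling.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class Deployment:
    # Hypothetical record of one deployment to production.
    first_commit_at: datetime
    deployed_at: datetime
    needed_remediation: bool  # e.g. a hotfix or rollback was required


def median_lead_time_hours(deployments):
    """Lead time for changes: first commit to deploy, in hours."""
    hours = [
        (d.deployed_at - d.first_commit_at).total_seconds() / 3600
        for d in deployments
    ]
    return median(hours)


def change_failure_rate(deployments):
    """Share of deployments that needed remediation in production."""
    failures = sum(1 for d in deployments if d.needed_remediation)
    return failures / len(deployments)


# Made-up example data, purely to show the calculation.
SAMPLE = [
    Deployment(datetime(2024, 3, 1, 9), datetime(2024, 3, 2, 9), False),
    Deployment(datetime(2024, 3, 4, 9), datetime(2024, 3, 6, 9), True),
    Deployment(datetime(2024, 3, 8, 9), datetime(2024, 3, 11, 9), False),
]

print(median_lead_time_hours(SAMPLE), change_failure_rate(SAMPLE))
```

Running the same calculation over a period before and a period after introducing the AI tool would give a like-for-like comparison, although plenty of other things affect these numbers too.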

Day 20

Find and read an article or paper that discusses Self-Healing Tests

Self healing architectures have been around for a while:

Perhaps where a circuit breaker disconnects an erroring dependency, then tests periodically to see if it is still problematic and, when it rights itself, reconnects it.
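For anyone who hasn’t met the pattern, a circuit breaker can be sketched roughly like this. It is a simplified illustration rather than any particular library’s implementation: the class, the threshold and the retry window are all my own assumptions.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, retry_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.retry_after = retry_after  # seconds to wait before probing again
        self.clock = clock  # injectable so the behaviour can be tested
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.retry_after:
                # Still disconnected: fail fast without touching the dependency.
                raise CircuitOpenError("dependency is disconnected")
            # Retry window has elapsed: let this one call through as a probe.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                # Open the circuit, or re-open it after a failed probe.
                self.opened_at = self.clock()
            raise
        # Success: the dependency has righted itself, so reconnect.
        self.failures = 0
        self.opened_at = None
        return result
```

The key point is that the healing happens at runtime to protect the users of a system, which is a different thing from a test quietly repairing itself.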

Self healing tests seem a slightly shakier use case to me. A failing test is an invitation to explore (or so the cliche goes), so to heal it seems a bit of a waste.

Self healing tests seem to focus on changing identifiers. This article on Browserstack focuses quite a lot on that problem:

https://www.browserstack.com/low-code-automation/what-is-self-healing-test-automation

There seem to be a couple of angles:

- Using multiple identifiers for an element (xpath, id, css selectors) to allow greater chance of healing the test.

- Issue identification and analysis. The article implies that a tool would tell you what has changed and then heal the test. So it wouldn’t be too opaque.
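The two angles above can be sketched together: try several identifiers in order, and keep a record whenever a fallback was needed so the healing isn’t silent. This is my own simplified illustration, not how Browserstack or any other tool implements it. `Locator`, `HealingFinder` and the `find` callback are hypothetical names, and the callback stands in for whatever your automation framework uses to look up an element.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Locator:
    name: str
    candidates: list  # ordered (strategy, value) pairs, preferred first


@dataclass
class HealingFinder:
    # find(strategy, value) returns an element, or None if nothing matched.
    find: Callable
    healed: list = field(default_factory=list)

    def locate(self, locator):
        for index, (strategy, value) in enumerate(locator.candidates):
            element = self.find(strategy, value)
            if element is not None:
                if index > 0:
                    # A fallback matched: record what broke and what worked.
                    self.healed.append(
                        (locator.name, locator.candidates[0], (strategy, value))
                    )
                return element
        raise LookupError(f"no candidate matched for {locator.name}")


# Fake page where the id has been renamed but the css selector still matches.
page = {("css", "form button[type=submit]"): "<button>"}
finder = HealingFinder(find=lambda strategy, value: page.get((strategy, value)))
submit = Locator("submit", [("id", "submit-btn"), ("css", "form button[type=submit]")])

print(finder.locate(submit), finder.healed)
```

The `healed` list is the part I would care about most. A test that passes via a fallback should still tell someone that the preferred identifier has gone.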

I suppose it would be a shame to fail your millions of tests on your overnight run because a developer renamed an element id. A team that works together probably has less need of this type of technology but maybe better for massive honking test suites for massive honking companies who can’t help but build huge integration suites for multiple teams?

How valuable is a feature like this to your team?

We have a separate class for identifiers so we can see any changes without having to dig into UI code. It’s not perfect but I think it helps here. Also, we are a small team who try our best to work closely together.

How might this fail? Based on your reading, how might this Self-Healing fail in a way that matters?

- After multiple changes, would the test retain its original intent? Maybe one change wouldn’t be so bad, so it is more of a cumulative thing?

- It could keep dead tests that don’t really do anything alive for longer.

I think my biggest fear is that everyone becomes sloppy when looking at automation like this, self healing ourselves into a false sense of self satisfaction. I don’t see a high functioning development team needing this too much.